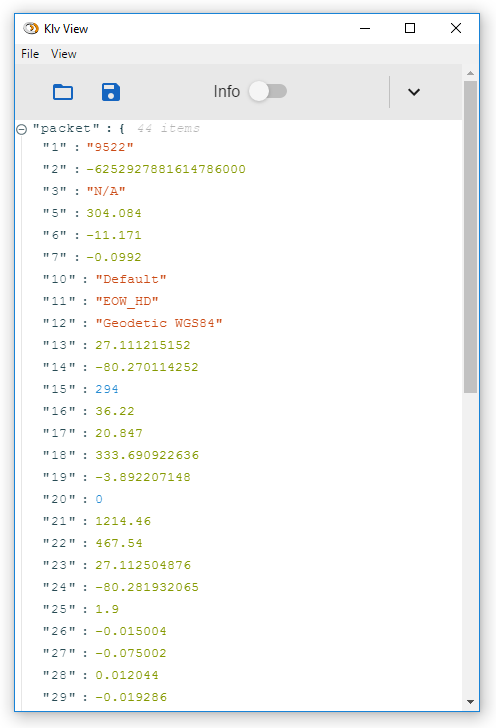

The standard library's json.load() begins as follows:

    def load(fp, *, cls=None, object_hook=None, parse_float=None,
             parse_int=None, parse_constant=None, object_pairs_hook=None, **kw):
        """Deserialize ``fp`` (a ``.read()``-supporting file-like object containing

So that’s one problem: just loading the file will take a lot of memory. In addition, there should be some usage from creating the Python objects. However, in this case they don’t show up at all, probably because peak memory is dominated by loading the file and decoding it from bytes to Unicode. That’s why actual profiling is so helpful in reducing memory usage and speeding up your software: the real bottlenecks might not be obvious.

Even if loading the file is the bottleneck, that still raises some questions. Once we load it into memory and decode it into a text (Unicode) Python string, it takes far more than 24MB. Why is that?

A brief digression: Python’s string memory representation

Python’s string representation is optimized to use less memory, depending on what the string contents are. First, every string has a fixed overhead. Then, if the string can be represented as ASCII, only one byte of memory is used per character. If the string uses more extended characters, it might end up using as many as 4 bytes per character.

We can see how much memory an object needs using sys.getsizeof(). Three strings that are each 1000 characters long use different amounts of memory depending on which characters they contain.

If you look at our large JSON file, it contains characters that don’t fit in ASCII. Because it’s loaded as one giant string, that whole giant string uses a less efficient memory representation.

It’s clear that loading the whole JSON file into memory is a waste of memory. With a larger file, it would be impossible to load at all.

Given a JSON file that’s structured as a list of objects, we could in theory parse it one chunk at a time instead of all at once. The resulting API would probably allow processing the objects one at a time. And if we look at the algorithm we want to run, that’s just fine; the algorithm does not require all the data be loaded into memory at once. We can process the records one at a time.

Whatever term you want to describe this approach (streaming, iterative parsing, chunking, or reading on-demand), it means we can reduce memory usage.

There are a number of Python libraries that support this style of JSON parsing; in the following example, I used the ijson library.

    import ijson

    user_to_repos = {}

    with open("large-file.json", "rb") as f:
        for record in ijson.items(f, "item"):
            user = record  # the field lookup on record is missing in the source
            repo = record  # the field lookup on record is missing in the source
            if user not in user_to_repos:
                user_to_repos[user] = set()
            user_to_repos[user].add(repo)

In the previous version, using the standard library, once the data is loaded we no longer need to keep the file open. With this API the file has to stay open because the JSON parser is reading from the file on demand, as we iterate over the records.

The items() API takes a query string that tells you which part of the record to return. In this case, "item" just means “each item in the top-level list we’re iterating over”; see the ijson documentation for more details.

Here’s what memory usage looks like with this approach:

When it comes to memory usage, problem solved! And as far as runtime performance goes, the streaming/chunked solution with ijson actually runs slightly faster, though this won’t necessarily be the case for other datasets or algorithms.

Other approaches

As always, there are other solutions you can try.
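Returning to the digression on string memory: the comparison of three 1000-character strings can be sketched as follows. This is a minimal illustration, not the article's original listing; the three sample characters are my own choice, and it assumes CPython, where sys.getsizeof() is supported and strings use the compact per-string encoding described above. Exact byte counts vary between Python versions, so the sketch prints them rather than stating them.

```python
import sys

# Three strings of identical length; only the characters differ.
# The specific characters are illustrative choices, not taken from the article.
samples = {
    "ascii": "a" * 1000,            # every character fits in ASCII
    "bmp": "\u2665" * 1000,         # U+2665 is outside Latin-1: 2 bytes per character
    "astral": "\U0001F4B5" * 1000,  # U+1F4B5 is outside the BMP: 4 bytes per character
}

# sys.getsizeof() reports the object's size in bytes, including the fixed overhead.
sizes = {name: sys.getsizeof(text) for name, text in samples.items()}

for name, text in samples.items():
    print(f"{name}: {len(text)} characters, {sizes[name]} bytes")
```

The widest character in a string decides the representation for the whole string, which is why a single large JSON document containing a few non-ASCII characters ends up stored in the less compact form.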
